This article was produced by NetEase Smart Studio (WeChat public account: smartman163). Follow us for AI coverage and a look at the next big era!

[NetEase Smart News, August 25] In my view, mental health and emotional health are closely connected, even though we all know that many other factors contribute to mental health problems.

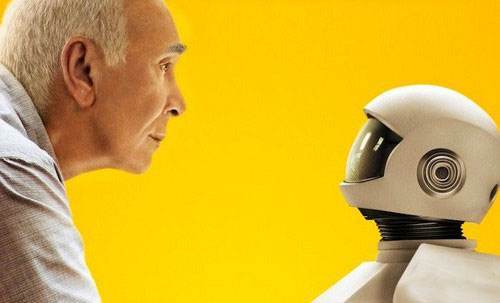

When we talk about the potential for artificial intelligence and robots to develop consciousness and rational thinking, we are implying that they might one day have thoughts and perspectives of their own.

If that happened, would we also be creating the possibility that AI and robots could experience mental health problems? Mental illness might then manifest in AI: a system could become dysfunctional precisely because of its conscious abilities.

Even if such a situation arose only in a lab, it could help us better understand the mechanisms behind mental illness and lead to better prevention and treatment strategies.

For me, the question is: should we allow AI and robots to become conscious, or will they eventually mimic human consciousness anyway, and with it, human mental health problems?

It also makes me wonder whether a conscious AI would be able to distinguish happiness from pain and joy from sadness.

A few days ago I was chatting with some friends, and none of them wanted a grumpy robot. That makes sense. But as with human emotions, people who always seem happy may actually be hiding more of their feelings; they have to be strong.

Another question to consider: if a robot shows signs of mental illness, does that mean it was programmed to have the disorder?

If robots gained free will, would they break away from their original programming and develop symptoms of their own? And if I deliberately created a "mental illness" in a robot, would that count as a human-like consciousness that had developed its own psychological problems?

Then comes the next question: how should we treat AI with mental health problems? Who would be responsible for their care? If we give them a human-like consciousness, do they deserve treatment?

Would the methods we use to treat people with mental illness work on AI? Would treatment mean something like medication or surgery, or simply reprogramming?

But if an AI is of sound mind, changing its consciousness without its consent could be seen as a violation of its rights. This is an important topic, and scholars at Oxford and Harvard have already begun discussing it from a philosophical angle.

It may seem far-fetched to worry about mental illness in AI and robots, and it may never happen. Still, limited as my view may be, I don't believe it is impossible.

One thing is clear: technological progress won't stop, and we need to look ahead to a future that is arriving at an ever-increasing pace.

We must consider whether the behavior of AI and robots might pose new challenges. These discussions may also give us deeper insight into human cognition and into how AI mimics it, which could in turn shape our understanding of mental illness.

I'll still be curious about this a hundred years from now. (Source: Tech World, AVID Murray-Hundley, and jixinyue8871)
