This article is produced by NetEase Smart Studio (public number: smartman 163). Focus on AI and read the next big era!

[Netease Smart News, August 25] Mental health challenges are often closely linked to our emotional states. While I know there are many other factors that contribute to mental health issues, I believe that the relationship between mind and body is more complex than we think.

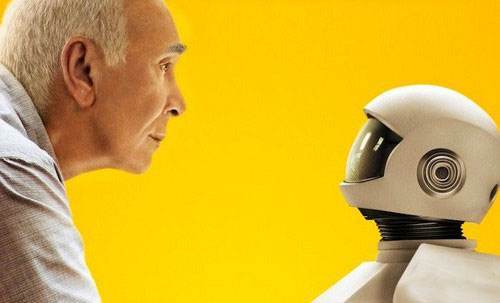

When we talk about the potential of artificial intelligence and robots to develop consciousness and rational thinking, it implies that they may one day have their own thoughts and feelings. If that happens, could they also suffer from mental illness? In a way, if AI becomes conscious, it might experience dysfunction similar to human mental disorders.

This situation, even in a controlled lab setting, could help us better understand the mechanisms behind mental illnesses, leading to new ways of prevention and treatment. It's not just about AI—it's about deepening our understanding of consciousness itself.

For me, the question is: Should we allow AI and robots to become conscious? If they do, will they eventually develop mental health issues like humans? And if they can feel emotions such as happiness or pain, what does that mean for their "well-being"?

A few days ago, I talked this over with some friends, and none of them wanted a grumpy robot. That makes sense. But just as with people, those who always seem happy are often hiding other emotions, and there is a certain strength in that.

Another thought: If a robot shows signs of mental illness, was it programmed to be that way? If robots have free will, could they break away from their original programming and develop symptoms? Would that be a sign of true consciousness, or just a malfunction?

Then comes the question: How should we treat AI that exhibits mental health issues? Who would be responsible for their care? If we give them human-like consciousness, do they deserve the same rights as humans? And if we reprogram them without consent, are we violating their autonomy?

Would traditional methods of treating mental illness, like medication or therapy, apply to AI? Could we “recode” their minds instead? But then again, is that ethical? This is a topic that philosophers at Oxford and Harvard have already started to explore.

It might sound absurd to think about mental illness in AI, and maybe it will never happen. But I don’t think that’s the point. The real issue is how we prepare for the future, and how we define consciousness, emotion, and well-being in machines.

Even if my perspective is limited, I believe one thing is clear: technological progress won’t stop. We need to look ahead, and think deeply about what it means to create intelligent life.

We must ask ourselves whether AI behaviors could lead to problems—and through these questions, we might gain new insights into human cognition and mental health. After all, understanding AI might help us understand ourselves better.

One day, I’ll be gone, but I’ll still be curious. (Source: Tech World, AVID Murray-Hundley, and jixinyue8871)
